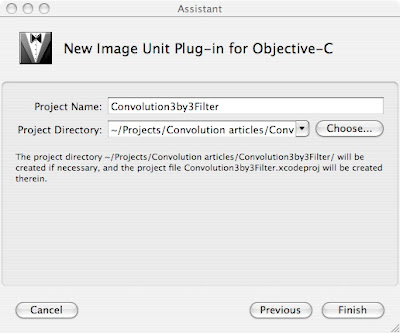

Xcode makes it easy to get started: it includes a project template called “Image Unit Plug-in for Objective-C” under “Standard Apple Plug-ins” in the Xcode new project window.

When this project is created, it already contains some stub Objective-C code (which we will never touch), an example kernel (which we will delete), and some .plist and .strings files for localization. There are two types of filters: executable and nonexecutable. Nonexecutable filters run entirely on the GPU, so they need no Objective-C code at all. A filter qualifies as nonexecutable only if every “sample” call in it has the form color = sample(someSrc, samplerCoord(someSrc)); The sample instructions in our filter have the form sample(src, (samplerCoord(src) + loc)), which does not match the nonexecutable form, so we have an executable filter.

For an executable filter, only the version number, filter class, and filter name are read from the Description.plist file. The other parameters need to be defined in an Objective-C subclass of CIFilter. Here's the interface to that class:

    #import <QuartzCore/QuartzCore.h>
    #import <Cocoa/Cocoa.h>

    @interface Convolution3by3 : CIFilter
    {
        CIImage  *inputImage;
        NSNumber *r00;
        NSNumber *r01;
        NSNumber *r02;
        NSNumber *r10;
        NSNumber *r11;
        NSNumber *r12;
        NSNumber *r20;
        NSNumber *r21;
        NSNumber *r22;
    }

    - (NSArray *)inputKeys;
    - (NSDictionary *)customAttributes;
    - (CIImage *)outputImage;

    @end

So, we declare our input parameters and override four methods: init, inputKeys, customAttributes, and outputImage. The names inputImage and outputImage are defined by convention and are used with key-value coding to call your filter. Filter processing is done in the outputImage method so that the filter is evaluated lazily: results are only generated when they are requested. Here's the implementation of init:

    #import "Convolution3by3.h"

    @implementation Convolution3by3

    // Shared by all instances; created once, the first time init runs.
    static CIKernel *convolutionKernel = nil;

    - (id)init
    {
        if (convolutionKernel == nil) {
            // Read the kernel source from this plug-in's bundle.
            NSBundle *bundle = [NSBundle bundleForClass:[self class]];
            NSString *code = [NSString stringWithContentsOfFile:
                                 [bundle pathForResource:@"Convolution3by3"
                                                  ofType:@"cikernel"]];
            // The file contains a single kernel, so take the first one.
            NSArray *kernels = [CIKernel kernelsWithString:code];
            convolutionKernel = [[kernels objectAtIndex:0] retain];
        }
        return [super init];
    }

The init method reads the cikernel code out of the bundle and stores the resulting kernel in the static convolutionKernel variable, so the work is done only once. It is possible to define multiple kernels in a single cikernel file if you want to, but I would probably keep them separate in most cases.

The inputKeys method simply returns an array of parameters for the filter:

    - (NSArray *)inputKeys
    {
        return [NSArray arrayWithObjects:@"inputImage",
            @"r00", @"r01", @"r02",
            @"r10", @"r11", @"r12",
            @"r20", @"r21", @"r22", nil];
    }

By far the longest method of the Image Unit is the customAttributes method, where we return an NSDictionary containing a separate NSDictionary for each of the filter parameters. Here's what it looks like:

    - (NSDictionary *)customAttributes
    {
        NSNumber *minValue = [NSNumber numberWithFloat:-10.0];
        NSNumber *maxValue = [NSNumber numberWithFloat:10.0];
        NSNumber *zero = [NSNumber numberWithFloat:0.0];
        NSNumber *one = [NSNumber numberWithFloat:1.0];

        return [NSDictionary dictionaryWithObjectsAndKeys:
            [NSDictionary dictionaryWithObjectsAndKeys:
                @"NSNumber", kCIAttributeClass,
                kCIAttributeTypeScalar, kCIAttributeType,
                minValue, kCIAttributeSliderMin,
                maxValue, kCIAttributeSliderMax,
                zero, kCIAttributeDefault, nil],
            @"r00",

            ...

This repeats for all nine parameters. The only one that differs is r11, the center parameter, which defaults to 1 so that by default the filter returns its input unaltered.

            ...

            [NSDictionary dictionaryWithObjectsAndKeys:
                @"NSNumber", kCIAttributeClass,
                kCIAttributeTypeScalar, kCIAttributeType,
                minValue, kCIAttributeSliderMin,
                maxValue, kCIAttributeSliderMax,
                zero, kCIAttributeDefault, nil],
            @"r22", nil];
    }

As you can see, the attributes of the filter include minimum and maximum slider values in case you want to build a dynamic user interface for the filter. These constants are all defined in CIFilter.h.
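Since the nine parameter dictionaries differ only in their default value, one way to cut down the repetition is to build them in a loop. The following is only a sketch of that idea, not the code from this project: the helper names ScalarAttributes and ConvolutionAttributes are made up for illustration, and the for-in loop assumes an Objective-C 2.0 compiler.

```objc
#import <Foundation/Foundation.h>
#import <QuartzCore/QuartzCore.h>

// Hypothetical helper (not part of the original filter): builds the
// attribute dictionary for one scalar parameter with the given default.
static NSDictionary *ScalarAttributes(float defaultValue)
{
    return [NSDictionary dictionaryWithObjectsAndKeys:
        @"NSNumber", kCIAttributeClass,
        kCIAttributeTypeScalar, kCIAttributeType,
        [NSNumber numberWithFloat:-10.0f], kCIAttributeSliderMin,
        [NSNumber numberWithFloat:10.0f], kCIAttributeSliderMax,
        [NSNumber numberWithFloat:defaultValue], kCIAttributeDefault,
        nil];
}

// Hypothetical helper: returns the same dictionary customAttributes builds
// by hand. Every coefficient defaults to 0 except the center one (r11),
// which defaults to 1.
static NSDictionary *ConvolutionAttributes(void)
{
    NSArray *keys = [NSArray arrayWithObjects:
        @"r00", @"r01", @"r02",
        @"r10", @"r11", @"r12",
        @"r20", @"r21", @"r22", nil];
    NSMutableDictionary *attributes = [NSMutableDictionary dictionary];
    for (NSString *key in keys) {
        float defaultValue = [key isEqualToString:@"r11"] ? 1.0f : 0.0f;
        [attributes setObject:ScalarAttributes(defaultValue) forKey:key];
    }
    return attributes;
}
```

With helpers like these, customAttributes could simply return ConvolutionAttributes(). The explicit version above is longer but makes every attribute visible at a glance, which is arguably nicer for a tutorial.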

The final method is the outputImage method. It is very simple:

    - (CIImage *)outputImage
    {
        CISampler *src = [CISampler samplerWithImage:inputImage];

        return [self apply:convolutionKernel, src,
            r00, r01, r02,
            r10, r11, r12,
            r20, r21, r22, nil];
    }

All we do here is get a sampler for the input image and call apply: with the kernel and all the parameters. This call returns our resulting image.
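To make it concrete why the default coefficients (r11 = 1, everything else 0) return the input unaltered, here is a small CPU-side reference for a single output value. It is only a sketch: it assumes the kernel computes a plain weighted sum over the 3x3 neighborhood, it works on one float channel, and the function names, the coefficient-to-neighbor mapping, and the border clamping are illustrative choices rather than anything taken from the kernel itself.

```objc
#import <Foundation/Foundation.h>

// CPU reference for one output value of a 3x3 convolution.
// Assumption: the result is a weighted sum of the 3x3 neighborhood.
// Which coefficient maps to which neighbor is illustrative only.
static float Convolve3x3(const float *pixels, int width, int height,
                         int x, int y, const float r[3][3])
{
    float sum = 0.0f;
    for (int dy = -1; dy <= 1; dy++) {
        for (int dx = -1; dx <= 1; dx++) {
            // Clamp coordinates at the image border (a simplification).
            int sx = MIN(MAX(x + dx, 0), width - 1);
            int sy = MIN(MAX(y + dy, 0), height - 1);
            sum += r[dy + 1][dx + 1] * pixels[sy * width + sx];
        }
    }
    return sum;
}

// Checks that the default coefficients leave the center pixel unchanged.
static BOOL IdentityPreservesCenter(void)
{
    const float image[9] = { 0.1f, 0.2f, 0.3f,
                             0.4f, 0.5f, 0.6f,
                             0.7f, 0.8f, 0.9f };
    // Default coefficients: r11 = 1, all others 0.
    const float identity[3][3] = { { 0.0f, 0.0f, 0.0f },
                                   { 0.0f, 1.0f, 0.0f },
                                   { 0.0f, 0.0f, 0.0f } };
    return Convolve3x3(image, 3, 3, 1, 1, identity) == image[4];
}
```

With the identity coefficients every neighbor is multiplied by 0 and the center by 1, so the sum is exactly the original value, whichever way the coefficients are laid out.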

Once you've compiled this filter you can make it available to the system by placing it in the /Library/Graphics/Image Units folder, or for just your user in ~/Library/Graphics/Image Units. Next time we will see how we call this image unit from a Cocoa program.
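In the meantime, a quick way to check that the installed unit is visible to Core Image might look like the sketch below. FilterIsAvailable is a made-up helper name, and the filter name you pass in (for example @"Convolution3by3") is an assumption: it has to match whatever name is registered in your Description.plist. Note also that loadAllPlugIns has been deprecated in recent macOS releases.

```objc
#import <Foundation/Foundation.h>
#import <QuartzCore/QuartzCore.h>

// Hypothetical helper: returns YES if Core Image can create a filter
// with the given name once the installed Image Units have been loaded.
static BOOL FilterIsAvailable(NSString *filterName)
{
    // Scans the standard Image Units folders, including
    // /Library/Graphics/Image Units and ~/Library/Graphics/Image Units.
    [CIPlugIn loadAllPlugIns];

    CIFilter *filter = [CIFilter filterWithName:filterName];
    if (filter == nil) {
        return NO;
    }

    // Apply the default values the filter declares, then list its inputs.
    [filter setDefaults];
    NSLog(@"%@ inputs: %@", filterName, [filter inputKeys]);
    return YES;
}
```

If this returns NO for your filter, the usual suspects are the install location and a mismatch between the name passed in and the name in Description.plist.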

4 comments:

Hi!

All your posts on the CoreImage-Programming were really useful for me, because I choose to hold a presentation on CoreImage and image processing at college and I'm working me through the CoreImage Programming Guide.

What really helped me, was your 2nd post on the kernel, 'cause I was trying to get a convolution running with the OpenGL language.

I'm new to the CoreImage-stuff and this is my 1st cocoa(and Mac)-project...so your posts have been a really good addition to the Apple documentation.

And if you don't mind I'll take some code of your cocoa-app as basis for mine...;-)

I've got one question: why isn't it necessary to do some clamping on the result pixel? I tried it with your code with the clamp() method, but there was no difference...

And I think there is a mistake in the last paragraph of this post. The directories are /Library/Graphics/Image Units and ~/Library/Graphics/Image Units

That's it so far.

Greetings from Germany,

Daniel

Daniel-

I'm glad you found the posts useful. I feel like I'm just getting started with this myself.

Please make use of this code in any way you want!

As far as clamping, I don't really have an official answer for you except that I think the system can really only display values up to 1.0, so clamping is not needed. For absolute correctness you probably should do that, and I'm sure that there is someone out there who might have an "official" answer for you. I'm thinking that if you were going to further process the values after the convolution, then perhaps you might want to clamp().

Thanks for catching my mistake. I've corrected the post as you see. That's what I get for posting at 1AM.

Paul

Hi Paul,

have you ever tested this filter with the "Core Image Fun House"?

It loads the Image Unit without any problems and it seems to work, but not in the way you're used to it from all the other filters in the Fun House:

the sliders are not very responsive (they are really hard to slide!) and the modification takes long or is applied when you deactivate and then reactivate the filter...

Can you confirm this behaviour? I'm looking for a solution to this, but so far nothing worked out.

Thanks and greets,

Daniel

Daniel-

No, I've never tried it with Core Image Fun House, sorry.

Paul
